Things tagged tech:

Dirty dealing in the $175 billion Amazon Marketplace

Josh Dzieza at The Verge:

For sellers, Amazon is a quasi-state. They rely on its infrastructure — its warehouses, shipping network, financial systems, and portal to millions of customers — and pay taxes in the form of fees. They also live in terror of its rules, which often change and are harshly enforced. A cryptic email like the one Plansky received can send a seller’s business into bankruptcy, with few avenues for appeal.

Sellers are more worried about a case being opened on Amazon than in actual court, says Dave Bryant, an Amazon seller and blogger. Amazon’s judgment is swifter and less predictable, and now that the company controls nearly half of the online retail market in the US, its rulings can instantly determine the success or failure of your business, he says. “Amazon is the judge, the jury, and the executioner.”

Via Schneier on Security.

Tech C.E.O.s Are in Love With Their Principal Doomsayer

Nellie Bowles in The New York Times with a profile of Yuval Noah Harari (use the archive link to bypass the paywall):

His prophecies might have made him a Cassandra in Silicon Valley, or at the very least an unwelcome presence. Instead, he has had to reconcile himself to the locals’ strange delight. “If you make people start thinking far more deeply and seriously about these issues,” he told me, sounding weary, “some of the things they will think about might not be what you want them to think about.”

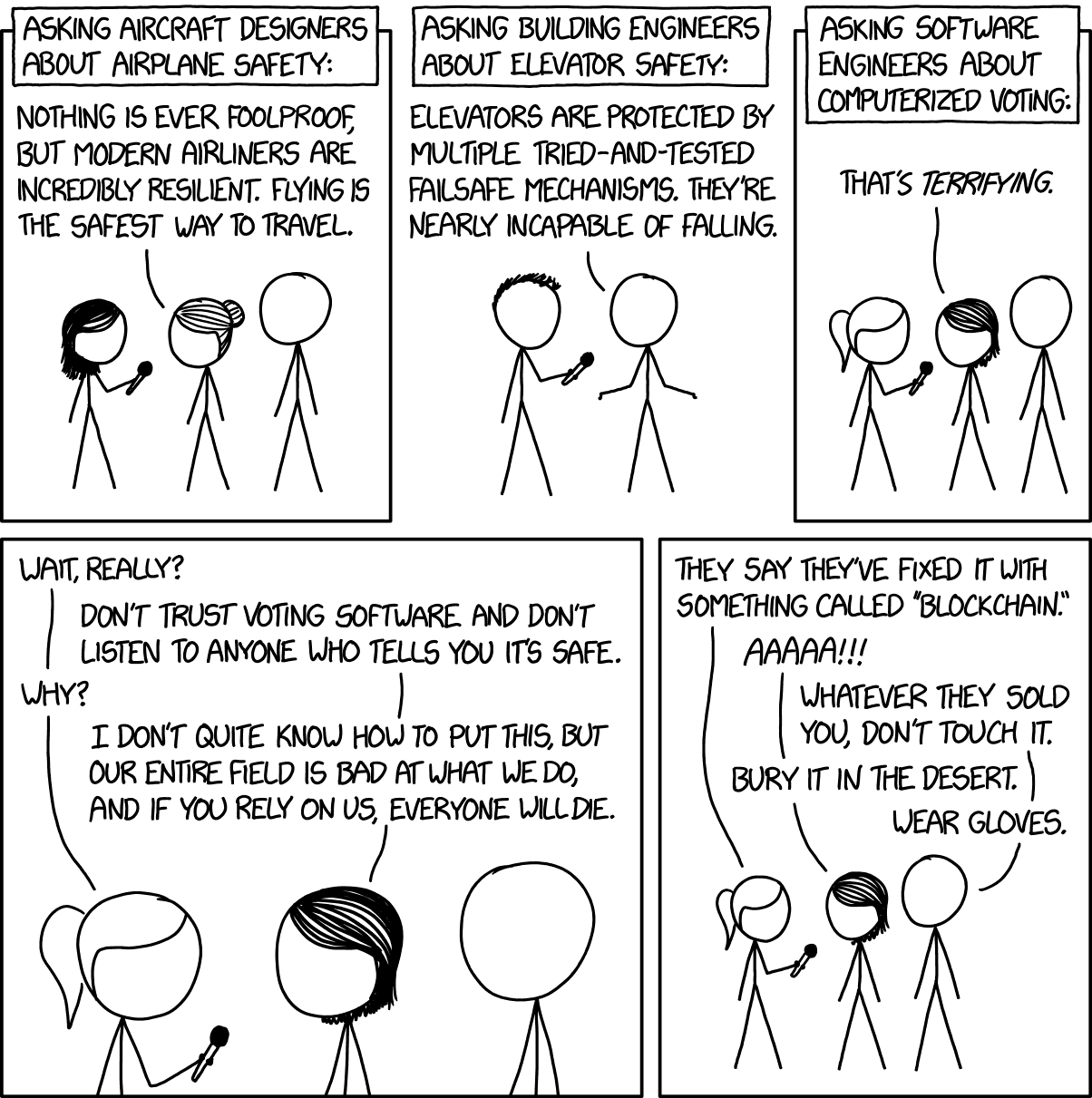

Voting Software

xkcd:

There are lots of very smart people doing fascinating work on cryptographic voting protocols. We should be funding and encouraging them, and doing all our elections with paper ballots until everyone currently working in that field has retired.

Dasher: information-efficient text entry

David MacKay:

Keyboards are inefficient for two reasons: they do not exploit the redundancy in normal language; and they waste the fine analogue capabilities of the user’s motor system (fingers and eyes, for example). I describe a system intended to rectify both these inefficiencies. Dasher is a text-entry system in which a language model plays an integral role, and it’s driven by continuous gestures.

More info and apps to play with it at http://www.inference.org.uk/dasher/

In my opinion, physical keyboards will always win, as they have a one-to-one relationship between thinking of a letter and accessing that letter (for alphabets with a reasonable number of characters, anyway). But this is a stunning improvement over eye-tracked onscreen keyboards, and a fun thing to play with. Check out the scanning box method in the phone version. For someone with severe physical disabilities, I think this might be best in class.
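To get a feel for what "a language model plays an integral role" can mean, here is a toy sketch. As I understand it, Dasher gives each candidate next letter a share of the screen proportional to its probability, so likely letters are big, easy targets. The bigram counts, the tiny corpus, and the function names below are my own illustration, not Dasher's actual code or model.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def next_char_intervals(counts, prev):
    """Split [0, 1) among candidate next characters, proportional to probability."""
    total = sum(counts[prev].values())
    intervals, low = {}, 0.0
    for ch, n in sorted(counts[prev].items()):
        high = low + n / total
        intervals[ch] = (low, high)
        low = high
    return intervals


corpus = "the then that this"  # hypothetical toy training text
model = train_bigrams(corpus)

# After typing "t", show how much of the selection space each next letter gets.
for ch, (low, high) in next_char_intervals(model, "t").items():
    print(f"{ch!r}: {low:.2f} to {high:.2f}")
```

A real system would use a much better language model and redraw the intervals continuously as you steer, but the idea of probability turning into target size is the same.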

The detection of faked identity using unexpected questions and mouse dynamics

Monaro M, Gamberini L, Sartori G in PLOS ONE:

The detection of faked identities is a major problem in security. Current memory-detection techniques cannot be used as they require prior knowledge of the respondent’s true identity. Here, we report a novel technique for detecting faked identities based on the use of unexpected questions that may be used to check the respondent identity without any prior autobiographical information. While truth-tellers respond automatically to unexpected questions, liars have to “build” and verify their responses. This lack of automaticity is reflected in the mouse movements used to record the responses as well as in the number of errors.

Via Schneier on Security.

The Making of TANK

Putting aside current 3D modeling techniques, Red Giant’s Chief Creative Officer Stu Maschwitz looked to the past and built a visual homage to vector arcade games of the 80’s entirely in Adobe After Effects, using math, code, and hundreds of hours of painstaking animation work.

Germany Acts to Tame Facebook, Learning From Its Own History of Hate

Katrin Bennhold in The New York Times (use the archive link to bypass the paywall):

Germany, home to a tough new online hate speech law, has become a laboratory for one of the most pressing issues for governments today: how and whether to regulate the world’s biggest social network.

François Chollet on Twitter

François Chollet, an AI expert at Google, on Twitter:

The problem with Facebook is not just the loss of your privacy and the fact that it can be used as a totalitarian panopticon. The more worrying issue, in my opinion, is its use of digital information consumption as a psychological control vector. Time for a thread

A Rubicon

Daniel E. Geer, Jr. at Hoover Institution:

Optimality and efficiency work counter to robustness and resilience. Complexity hides interdependence, and interdependence is the source of black swan events. The benefits of digitalization are not transitive, but the risks are. Because single points of failure require militarization wherever they underlie gross societal dependencies, frank minimization of the number of such single points of failure is a national security obligation. Because cascade failure ignited by random faults is quenched by redundancy, whereas cascade failure ignited by sentient opponents is exacerbated by redundancy, (preservation of) uncorrelated operational mechanisms is likewise a national security obligation.

Drive Chains with Paul Sztorc

Paul Sztorc has finally figured out how to communicate about what Drive Chains is, and here is a good hour and a half of that.

Pro-Neutrality, Anti-Title II

Ben Thompson at Stratechery:

I believe that Ajit Pai is right to return regulation to the same light touch under which the Internet developed and broadband grew for two decades. I am amenable to Congress passing a law specifically banning ISPs from blocking content, but believe that for everything else, including paid prioritization, we are better off taking a “wait-and-see” approach; after all, we are just as likely to “see” new products and services as we are to see startup foreclosure.

One law professor's overview of the confusing net neutrality debate

Orin Kerr presents Gus Hurwitz on The Volokh Conspiracy:

The most confounding aspect of the contemporary net neutrality discussion to me is the social meanings that the concept has taken on. These meanings are entirely detached from the substance of the debate, but have come to define popular conceptions of what net neutrality means. They are, as best I can tell, wholly unassailable, in the sense that one cannot engage with them. This is probably the most important and intellectually interesting aspect of the debate - it raises important questions about the nature of regulation and the administrative state in complex technical settings.

The most notable aspect is that net neutrality has become a social justice cause. Progressive activist groups of all stripes have come to believe that net neutrality is essential to and allied with their causes. I do not know how this happened – but it is frustrating, because net neutrality is likely adverse to many of their interests.

What it would take to change my mind on net neutrality

Tyler Cowen at MR:

Keep in mind, I’ve favored net neutrality for most of my history as a blogger. You really could change my mind back to that stance. Here is what you should do.

Farewell - ETAOIN SHRDLU

A film created by Carl Schlesinger and David Loeb Weiss documenting the last day of hot metal typesetting at The New York Times.

“It’s inevitable that we’re going to go into computers, all the knowledge I’ve acquired over these 26 years is all locked up in a little box called a computer, and I think most jobs are going to end up the same way. [Do you think computers are a good idea in general?] There’s no doubt about it, they are going to benefit everybody eventually.”

–Journeyman Printer, 1978

A Model of Technological Unemployment

There is a bit of a backlash against UBI in the economics community at the moment, which I think is unfortunately due to a lack of awareness of the changes that may be coming to the labor market. Here is a paper by Daniel Susskind on why mainstream economics may have it wrong on the labor issues:

The economic literature that explores the consequences of technological change on the labour market tends to support an optimistic view about the threat of automation. In the more recent ‘task-based’ literature, this optimism has typically relied on the existence of firm limits to the capabilities of new systems and machines. Yet these limits have often turned out to be misplaced. In this paper, rather than try to identify new limits, I build a new model based on a very simple claim – that new technologies will be able to perform more types of tasks in the future. I call this ‘task encroachment’. To explore this process, I use a new distinction between two types of capital – ‘complementing’ capital, or ’c-capital’, and ‘substituting’ capital, or ‘s-capital’. As the quantity and productivity of s-capital increases, it erodes the set of tasks in which labour is complemented by c-capital. In a static version of the model, this process drives down relative wages and the labour share of income. In a dynamic model, as s-capital is accumulated, labour is driven out the economy and wages decline to zero. In the limit, labour is fully immiserated and ‘technological unemployment’ follows.

Personally, I see huge changes coming in the near to medium term (5-40 years), such that by the end of that period about 40% of the currently employed population will have no jobs available to them. (If minimum wage laws hold, that would mean literally no jobs; if minimum wage laws fall, it would mean jobs that pay only subsistence-level wages.) That will cause major changes to the economy, of course. Goods will be produced at much lower cost, but how will people who don’t have jobs buy them? This is where UBI begins to make sense to me.

Then, in the medium to long term, we have the potential of an AI singularity, in which case, who knows. Even if there is no singularity, automation’s encroachment on refuge labor will continue …

Via MR.

How Do I Crack Satellite and Cable Pay TV?

If you are interested in hardware reverse engineering of crypto systems, this is a very accessible talk.

Display Prep Demo

A cinematographer attempts a digital vs. film comparison, in a very particular way. I think he fails to reach the goal he set for himself, but I don’t believe the theory he presents is incorrect. In a few years, digital imaging tech and the math to perform the transforms will have progressed, and we will be there. Then this silly debate will be over :)

See also this revealing conversation he had with a film purist:

People are so religious about this that they’re resistant to even trying. But not trying does not prove it’s not possible.

If you believe there are attributes that haven’t been identified and/or properly modeled in my personal film emulation, then that means you believe those attributes exist. If they exist, they can be identified. If they don’t exist, then, well, they don’t exist and the premise is false.

It just doesn’t seem like a real option that these attributes exist but can never be identified and are effectively made out of intangible magic that can never be understood or studied.

To insist that film is pure magic and to deny the possibility of usefully modeling its properties would be like saying to Kepler in 1595 as he tried to study the motion of the planets: “Don’t waste your time, no one can ever understand the ways of God so don’t bother. You’ll never be able to make an accurately predictive mathematical model of the crazy motions of the planets — they just do whatever they do.”

Security and the Normalization of Deviance

Professional pilot Ron Rapp has written a fascinating article on a 2014 Gulfstream plane that crashed on takeoff. The accident was 100% human error and entirely preventable – the pilots ignored procedures and checklists and warning signs again and again. Rapp uses it as example of what systems theorists call the “normalization of deviance.”

The point is that normalization of deviance is a gradual process that leads to a situation where unacceptable practices or standards become acceptable, and flagrant violations of procedure become normal – despite that fact that everyone involved knows better.

I think this is a useful term for IT security professionals. I have long said that the fundamental problems in computer security are not about technology; instead, they’re about using technology. We have lots of technical tools at our disposal, and if technology alone could secure networks we’d all be in great shape. But, of course, it can’t. Security is fundamentally a human problem, and there are people involved in security every step of the way.

I have seen this personally many times. You can be sloppy several hundred/thousand/whatever times and it doesn’t bite you, so you come to believe that being sloppy carries no risk, and then boom, out of “nowhere,” a failure. When you look back and analyze the failure, you will find that complacency about the new normal of sloppy behavior is the root cause.
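A bit of back-of-the-envelope arithmetic shows why the failure only looks like it came out of nowhere. This is just a sketch: the 1-in-1,000 chance per shortcut is a made-up number, and I am assuming each shortcut is an independent roll of the dice, which real life rarely is.

```python
def chance_of_at_least_one_failure(p_per_shortcut, n_shortcuts):
    """Probability that at least one of n independent shortcuts goes wrong."""
    return 1.0 - (1.0 - p_per_shortcut) ** n_shortcuts


# Hypothetical risk per sloppy act: 1 in 1,000 (an assumption, not measured data).
P_FAILURE = 0.001

for n in (1, 100, 1000, 5000):
    risk = chance_of_at_least_one_failure(P_FAILURE, n)
    print(f"{n:>5} shortcuts: {risk:.1%} chance of at least one failure")
```

Each individual shortcut looks safe, which is exactly what trains you to keep taking it, while the cumulative risk keeps climbing toward near certainty.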